Regularization Machine Learning Mastery

Regularization is a technique that helps prevent a model from overfitting by adding extra information to it, typically in the form of a penalty on model complexity.


It is a form of regression that constrains, or shrinks, the coefficient estimates toward zero.

This is exactly why we use it in applied machine learning. Each regularization method is…

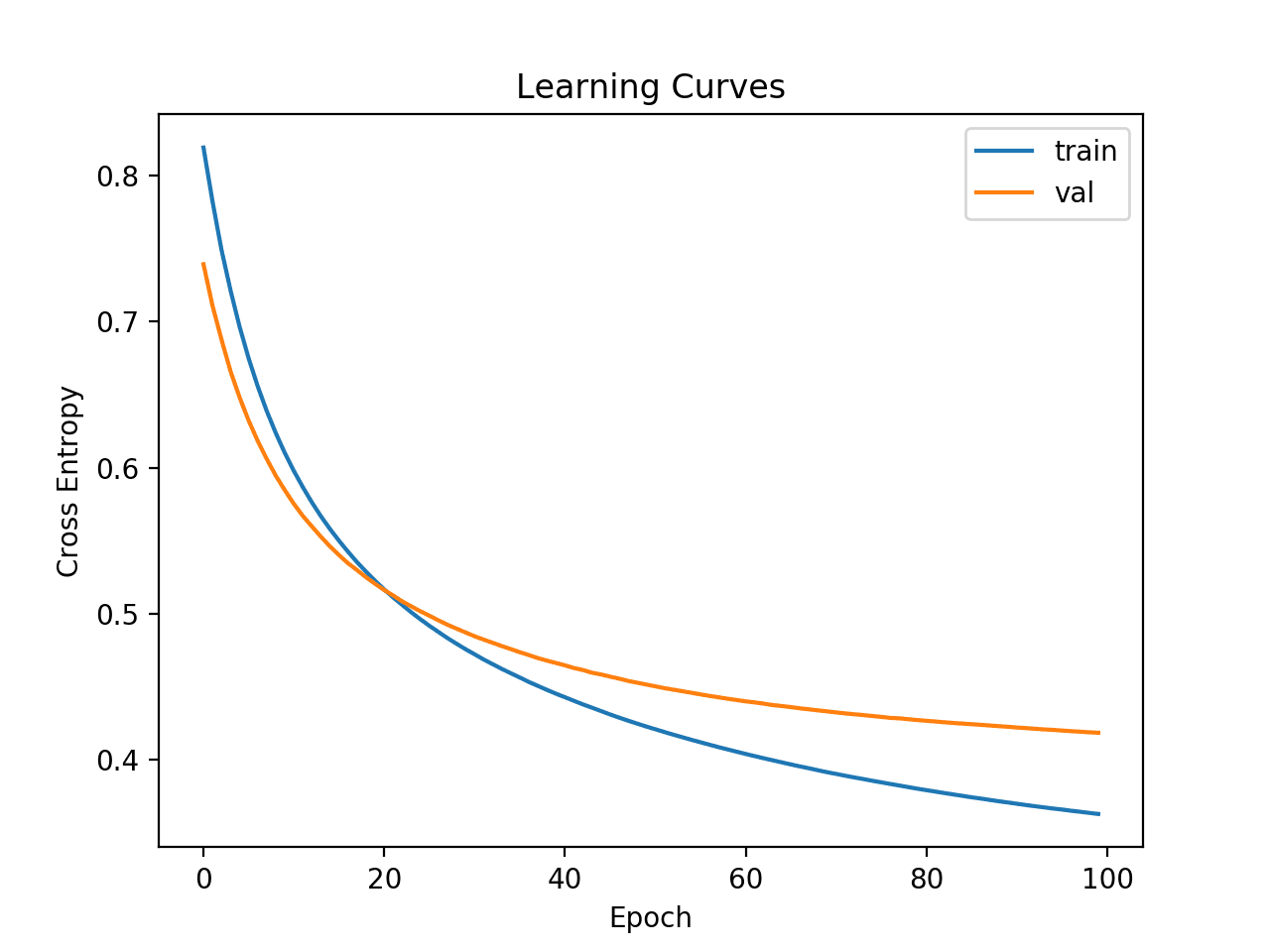

There are different ways to go about it, for example measuring a loss function and… Large weights in a neural network are a sign of a more complex network, one that is more likely to have overfit the training data. Regularization works by adding a penalty, or complexity term, to the loss of the complex model.
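A minimal sketch of "adding a penalty term to the loss", assuming a linear model scored with mean squared error and an L2 penalty (the sum of squared weights) scaled by a strength `lam`. The function name `penalized_loss` and the toy data are illustrative, not taken from any library.

```python
import numpy as np


def penalized_loss(w, X, y, lam):
    """Mean squared error plus an L2 complexity penalty on the weights (illustrative)."""
    residuals = X @ w - y
    data_loss = np.mean(residuals ** 2)   # how well the model fits the data
    penalty = lam * np.sum(w ** 2)        # grows with the size of the weights
    return data_loss + penalty


X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 3.0]])
y = np.array([1.0, 2.0, 3.0])

small_w = np.array([0.5, 0.5])
large_w = np.array([5.0, -4.0])

print(penalized_loss(small_w, X, y, lam=0.0))   # plain MSE, no penalty
print(penalized_loss(small_w, X, y, lam=0.1))   # same fit, small penalty added
print(penalized_loss(large_w, X, y, lam=0.1))   # large weights cost much more
```

With `lam=0` this reduces to the ordinary loss; raising `lam` makes large weights more expensive, which pushes the optimizer toward simpler models.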

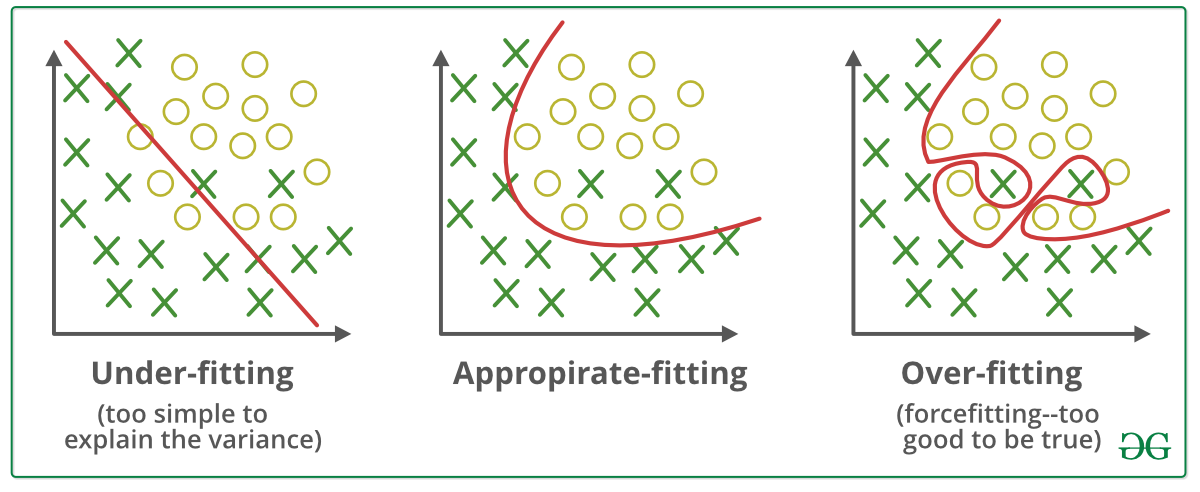

Regularization in machine learning allows you to avoid overfitting your model to the training data. Overfitting happens when your model captures the noise in the training data rather than only the underlying pattern. I have covered the entire concept in two parts.
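A small illustration of overfitting, assuming NumPy and synthetic data drawn from a straight line plus noise. A degree-9 polynomial can pass through all ten training points, so its training error is essentially zero, but it does so by fitting the noise. Its test error will typically be worse than the straight-line fit's, although the exact numbers depend on the random seed.

```python
import numpy as np

rng = np.random.default_rng(0)

# Noisy samples of a simple underlying pattern (a straight line).
x_train = np.linspace(0.0, 1.0, 10)
y_train = 2.0 * x_train + rng.normal(scale=0.2, size=x_train.shape)
x_test = np.linspace(0.05, 0.95, 10)
y_test = 2.0 * x_test + rng.normal(scale=0.2, size=x_test.shape)


def mse(coeffs, x, y):
    """Mean squared error of a polynomial given by its coefficients."""
    return np.mean((np.polyval(coeffs, x) - y) ** 2)


for degree in (1, 9):
    coeffs = np.polyfit(x_train, y_train, degree)
    print(f"degree {degree}: train MSE={mse(coeffs, x_train, y_train):.4f}, "
          f"test MSE={mse(coeffs, x_test, y_test):.4f}")
```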

Regularization in machine learning, setting up a machine-learning model, and L2 regularization (Ridge Regression) are the topics covered.
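L2 regularization (Ridge Regression) has a closed-form solution, which makes its shrinking effect easy to see. A minimal sketch, assuming NumPy, no intercept term (centered data) and the objective ||y - Xw||² + α||w||²; `ridge_fit` is a hypothetical helper name and the data is synthetic.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge regression: minimizes ||y - Xw||^2 + alpha * ||w||^2."""
    n_features = X.shape[1]
    # Solve (X^T X + alpha I) w = X^T y instead of forming an explicit inverse.
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


rng = np.random.default_rng(42)
X = rng.normal(size=(50, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.5, 1.0])
y = X @ true_w + rng.normal(scale=0.5, size=50)

for alpha in (0.0, 1.0, 10.0, 100.0):
    w = ridge_fit(X, y, alpha)
    print(f"alpha={alpha:>6}: ||w|| = {np.linalg.norm(w):.3f}")
```

`alpha=0` is ordinary least squares; as `alpha` grows, the norm of the coefficient vector shrinks toward zero.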

It is not a complicated technique, and it simplifies the machine learning process. Regularization dodges overfitting. People are often confused when selecting a suitable regularization approach to avoid overfitting while training a machine learning model.
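One practical way through that confusion is to make the choice empirically: fit the model with several candidate penalty strengths and keep the one that does best on data held out from training. A minimal sketch, assuming ridge regression, NumPy, a simple hold-out split and an illustrative grid of `alphas`. K-fold cross-validation is a more robust variant of the same idea, and the same loop could compare different penalty types rather than only strengths.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge: minimizes ||y - Xw||^2 + alpha * ||w||^2."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)


rng = np.random.default_rng(1)
X = rng.normal(size=(80, 10))
w_true = np.zeros(10)
w_true[:3] = [2.0, -1.0, 0.5]
y = X @ w_true + rng.normal(scale=1.0, size=80)

# Hold out the last 20 rows to judge each candidate penalty strength.
X_train, X_val = X[:60], X[60:]
y_train, y_val = y[:60], y[60:]

alphas = [0.0, 0.1, 1.0, 10.0, 100.0]
val_errors = []
for alpha in alphas:
    w = ridge_fit(X_train, y_train, alpha)
    val_errors.append(np.mean((X_val @ w - y_val) ** 2))

best_alpha = alphas[int(np.argmin(val_errors))]
print("validation MSE per alpha:", np.round(val_errors, 3))
print("chosen alpha:", best_alpha)
```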

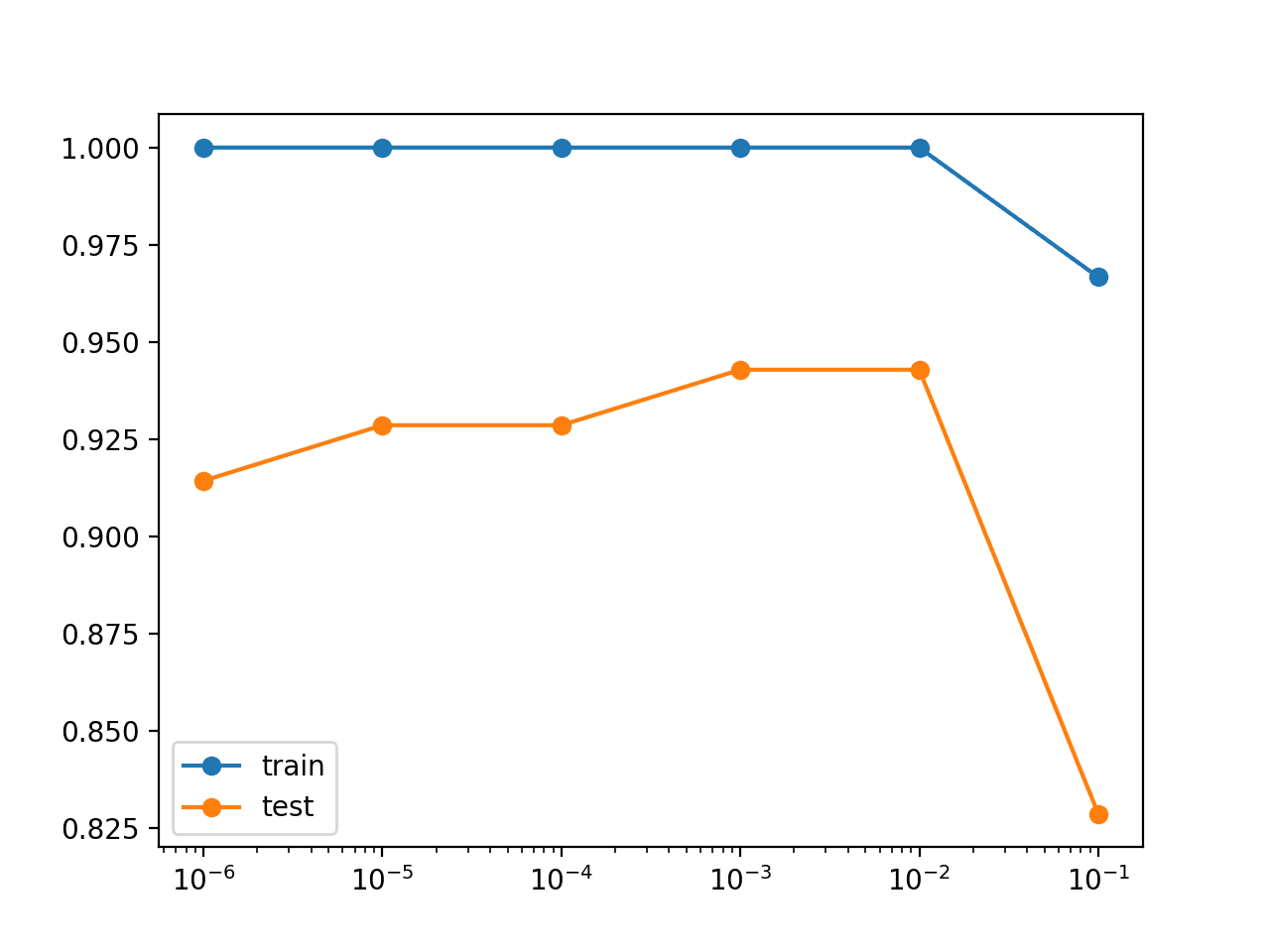

In Python, we have a REPL (read-eval-print loop) in which we can run commands line by line. In general, regularization means making things regular or acceptable. In this post, you will discover weight regularization as an approach to reduce overfitting in neural networks.
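Weight regularization in a neural network amounts to adding the penalty's gradient to every weight update, which is why L2 regularization is also called weight decay. A minimal NumPy sketch, assuming a single sigmoid unit trained with plain gradient descent rather than a full network or a framework such as Keras; `train_neuron`, `lam`, the learning rate and the step count are illustrative choices.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_neuron(X, y, lam, lr=0.5, steps=2000):
    """Gradient descent on the mean log loss of a single sigmoid unit.

    lam > 0 adds an L2 weight penalty lam * ||w||^2, whose gradient
    2 * lam * w shrinks every weight toward zero on each step.
    """
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y)   # log-loss gradient
        grad += 2.0 * lam * w                         # L2 penalty gradient
        w -= lr * grad
    return w


# Linearly separable toy data: without a penalty the weight keeps growing.
X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
y = np.array([0.0, 0.0, 1.0, 1.0])

w_plain = train_neuron(X, y, lam=0.0)
w_decay = train_neuron(X, y, lam=0.1)
print("no penalty:", w_plain, " with L2 penalty:", w_decay)
```

On separable data like this, the unpenalized weight keeps growing the longer training runs, while the penalized weight settles at a finite value. Deep learning libraries usually let you attach the same kind of penalty to a layer's weights instead of writing the update by hand.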

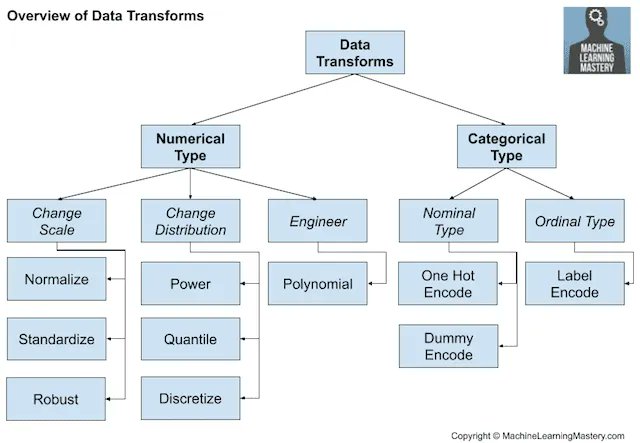

Regularization is one of the most basic and important concepts in machine learning, a field in which systems are programmed to learn and improve from experience automatically.

Types of Regularization

Regularization helps us build a model that generalizes beyond the training data instead of fitting its quirks, accepting a little extra bias in exchange for lower variance. Together with some inspection tools provided by Python, the REPL helps us develop code. Regularization is essential in both machine learning and deep learning.

Part 1 deals with the theory. Let's consider the simple linear regression equation. It is one of the most important concepts of machine learning.
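Written out in standard notation (assumed here, not taken from the original), the linear regression model with p predictors and the residual sum of squares (RSS) that ordinary least squares minimizes are shown first. Ridge and lasso regression then add a penalty on the coefficients, controlled by λ ≥ 0; the intercept β₀ is conventionally left unpenalized.

```latex
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p

\mathrm{RSS} = \sum_{i=1}^{n} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Bigr)^2

\text{Ridge (L2):}\quad \min_{\beta}\; \mathrm{RSS} + \lambda \sum_{j=1}^{p} \beta_j^2

\text{Lasso (L1):}\quad \min_{\beta}\; \mathrm{RSS} + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
```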

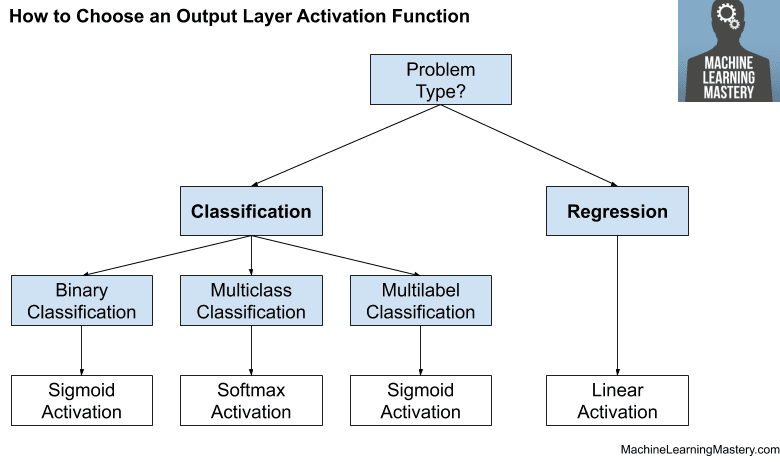

Based on the approach used to overcome overfitting, we can classify regularization techniques into three categories. Regularization is the process of introducing additional information in order to solve an ill-posed problem or to prevent overfitting. A regression model that uses the L1 regularization technique is called Lasso Regression, and a model that uses L2 is called Ridge Regression.
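The practical difference between the two penalties is that L1 (lasso) can set coefficients to exactly zero, while L2 (ridge) only shrinks them. A minimal NumPy sketch, assuming the 1/(2n) loss scaling shown in the docstrings (one common convention), a lasso solved by coordinate descent with soft-thresholding, and a tiny hand-built dataset with two orthogonal features; all function names are illustrative.

```python
import numpy as np


def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha, clipping to exactly zero."""
    return np.sign(rho) * max(abs(rho) - alpha, 0.0)


def lasso_cd(X, y, alpha, n_sweeps=100):
    """Coordinate descent for (1 / (2n)) * ||y - Xw||^2 + alpha * ||w||_1."""
    n, p = X.shape
    w = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_sweeps):
        for j in range(p):
            # Residual with feature j's current contribution added back.
            partial = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ partial / n
            w[j] = soft_threshold(rho, alpha) / col_sq[j]
    return w


def ridge(X, y, alpha):
    """Closed form for (1 / (2n)) * ||y - Xw||^2 + (alpha / 2) * ||w||^2."""
    n, p = X.shape
    return np.linalg.solve(X.T @ X / n + alpha * np.eye(p), X.T @ y / n)


# Two orthogonal features; the second has only a small true effect.
X = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
y = 3.0 * X[:, 0] + 0.2 * X[:, 1]

print("lasso (L1):", lasso_cd(X, y, alpha=0.5))   # second coefficient is exactly 0
print("ridge (L2):", ridge(X, y, alpha=0.5))      # both shrunk, neither zero
```

Because lasso zeroes out weak features, it doubles as a feature-selection method; ridge keeps every feature but with smaller weights.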

In the context of machine learning…


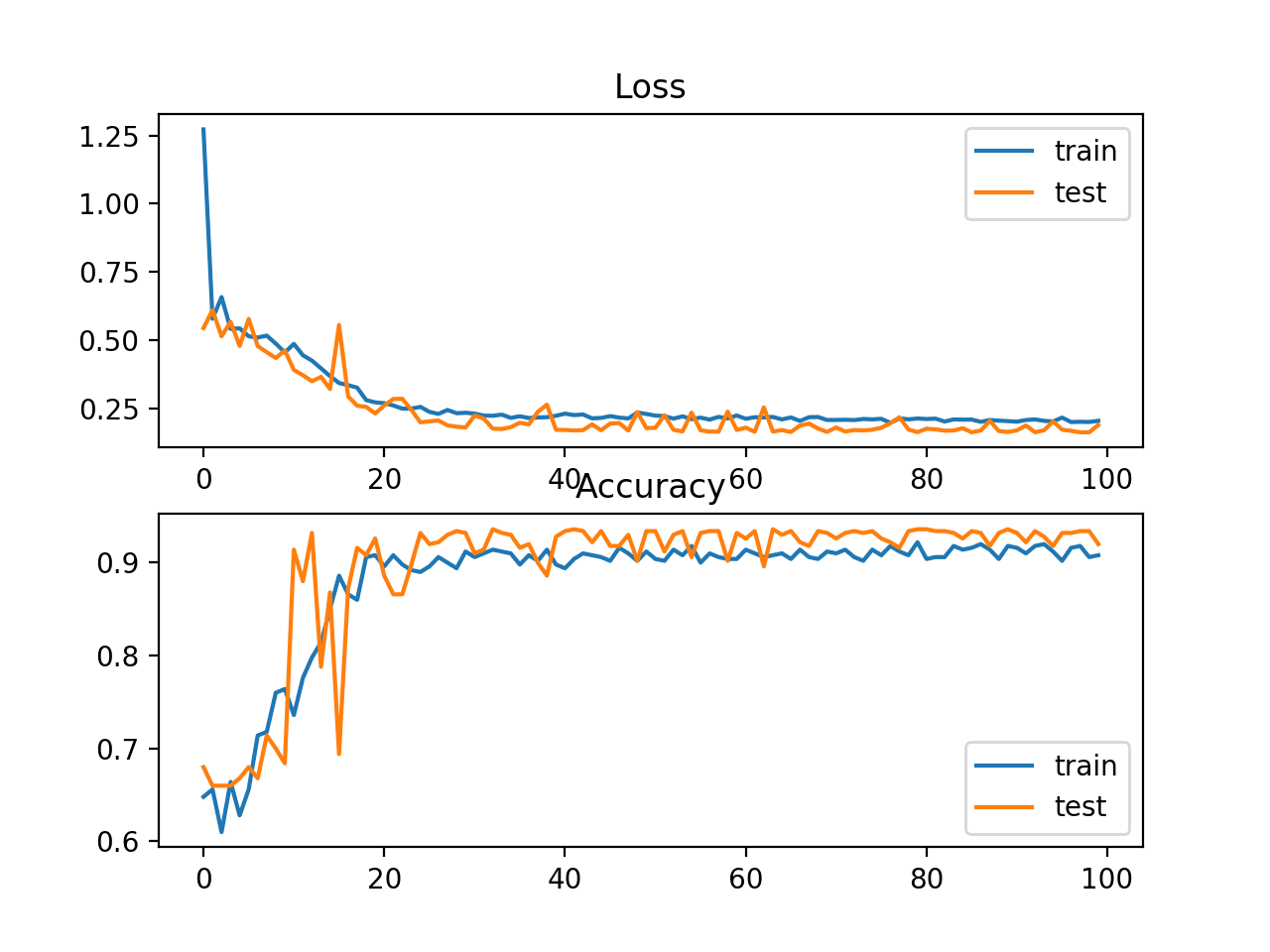
